AI Black Box: Understanding the Hidden Patterns

Deep underlying regularities in AI reveal predictable trends, empowering informed decision-making.

[Image source: Krishna Prasad/MITSMR Middle East]

AI is changing at an enormous pace. Every day brings a new story, and it’s hard to know what to do with each one. At the same time, people are making enormous bets on AI, putting billions of dollars behind particular ideas.

One might wonder why people are willing to invest billions of dollars in an idea that they consider unpredictable.

The answer is that it’s not unpredictable at all. Some deep regularities mean you can predict what will happen with AI. Understanding those can help you make much better decisions. For instance, demand forecasting in supermarkets is critical. It significantly impacts both customer satisfaction and financial performance. Ensuring the right amount of stock on shelves is essential. Understocking leads to unhappy customers and lost sales, while overstocking results in wasted products and revenue.

Traditional Demand Forecasting

Traditionally, supermarket managers relied heavily on historical sales data, like last year’s sales during the same period and any recent trends indicating increased demand. This method, while straightforward, has limitations. It often fails to account for a myriad of external factors that can influence demand, such as weather conditions, local events, or sudden shifts in consumer preferences.
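The traditional approach can be sketched in a few lines. This is a minimal illustration, assuming a hypothetical rule: take last year's sales for the same week and scale them by the recent year-over-year trend. The function name `baseline_forecast` and all figures are invented for the example and do not come from the talk.

```python
def baseline_forecast(last_year_same_week, recent_this_year, recent_last_year):
    """Scale last year's same-week sales by the recent year-over-year trend.

    All inputs are unit sales. The rule itself is an illustrative assumption.
    """
    if not recent_this_year or len(recent_this_year) != len(recent_last_year):
        raise ValueError("recent windows must be non-empty and of equal length")
    total_last_year = sum(recent_last_year)
    if total_last_year <= 0:
        raise ValueError("last year's recent sales must be positive")
    # Ratio above 1 means demand is running ahead of last year.
    trend = sum(recent_this_year) / total_last_year
    return last_year_same_week * trend


# Hypothetical numbers: recent weeks are running 10% above last year.
forecast = baseline_forecast(
    last_year_same_week=200,
    recent_this_year=[110, 121, 99],
    recent_last_year=[100, 110, 90],
)
print(round(forecast, 1))
```

Nothing in this rule knows about weather, local events, or competing products, which is exactly the limitation described above.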

AI-Powered Demand Forecasting

An MIT lab used AI at one store to improve demand forecasting accuracy, and prediction error fell by one-third. However, because of the initial development and operational costs, the project was temporarily paused and later resumed with a more measured approach.

This scenario raises important questions: Why were the costs so high, and were the improvements significant enough to justify them? To answer these, it’s essential to delve into the complexities of demand forecasting and understand why AI is particularly suited to this task.

The Complexity of Accurate Demand Forecasting

Consider the task of predicting ice cream sales for the next week. At first glance, this might seem straightforward—look at last week’s or last year’s sales data. However, a deeper analysis reveals numerous factors that can influence ice cream demand:

- Competing Products: Launching a new ice cream brand or flavor could divert sales.

- Weather Conditions: A sunny day can significantly boost ice cream sales.

- Local Events: Sports events, especially in the United States, can drive demand for certain products like guacamole. Similarly, local events can influence ice cream sales.

- Health Trends: Current health trends can affect consumer choices.

- Interdependencies: Various factors can interact in complex ways, such as a health trend coinciding with a product recall.

Considering these factors, predicting ice cream sales becomes complex. Each factor could potentially influence demand, and their interdependencies add layers of complexity. Traditional models struggle with this level of complexity, but AI, particularly deep learning, does a much better job.
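To make the point about interdependencies concrete, here is a minimal sketch with made-up numbers. `demand_additive` treats weather and a local event in isolation, while `demand_with_interaction` adds a hypothetical joint effect when both occur together. Neither function comes from the talk, and the coefficients are assumptions chosen only to show the gap.

```python
def demand_additive(sunny, local_event):
    """Each factor contributes on its own; interactions are ignored."""
    return 100 + 50 * sunny + 30 * local_event


def demand_with_interaction(sunny, local_event):
    """Same factors, plus an extra (hypothetical) boost when both coincide."""
    return demand_additive(sunny, local_event) + 40 * sunny * local_event


# Compare the two views of demand across all four scenarios.
for sunny in (0, 1):
    for local_event in (0, 1):
        additive = demand_additive(sunny, local_event)
        actual = demand_with_interaction(sunny, local_event)
        print(f"sunny={sunny} event={local_event} "
              f"additive={additive} with_interaction={actual} gap={actual - additive}")
```

The two functions agree whenever only one factor is present. They diverge only when a sunny day and a local event coincide, which is the kind of joint effect a model that examines variables one at a time would miss.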

The Power of Deep Learning

Deep learning, a subset of AI, is particularly adept at handling complex, multi-variable problems. Unlike traditional models that might look at a few variables in isolation, deep learning models can analyze vast amounts of data and identify patterns and relationships that humans might miss. Processing and learning from large datasets allows AI to make more accurate predictions.

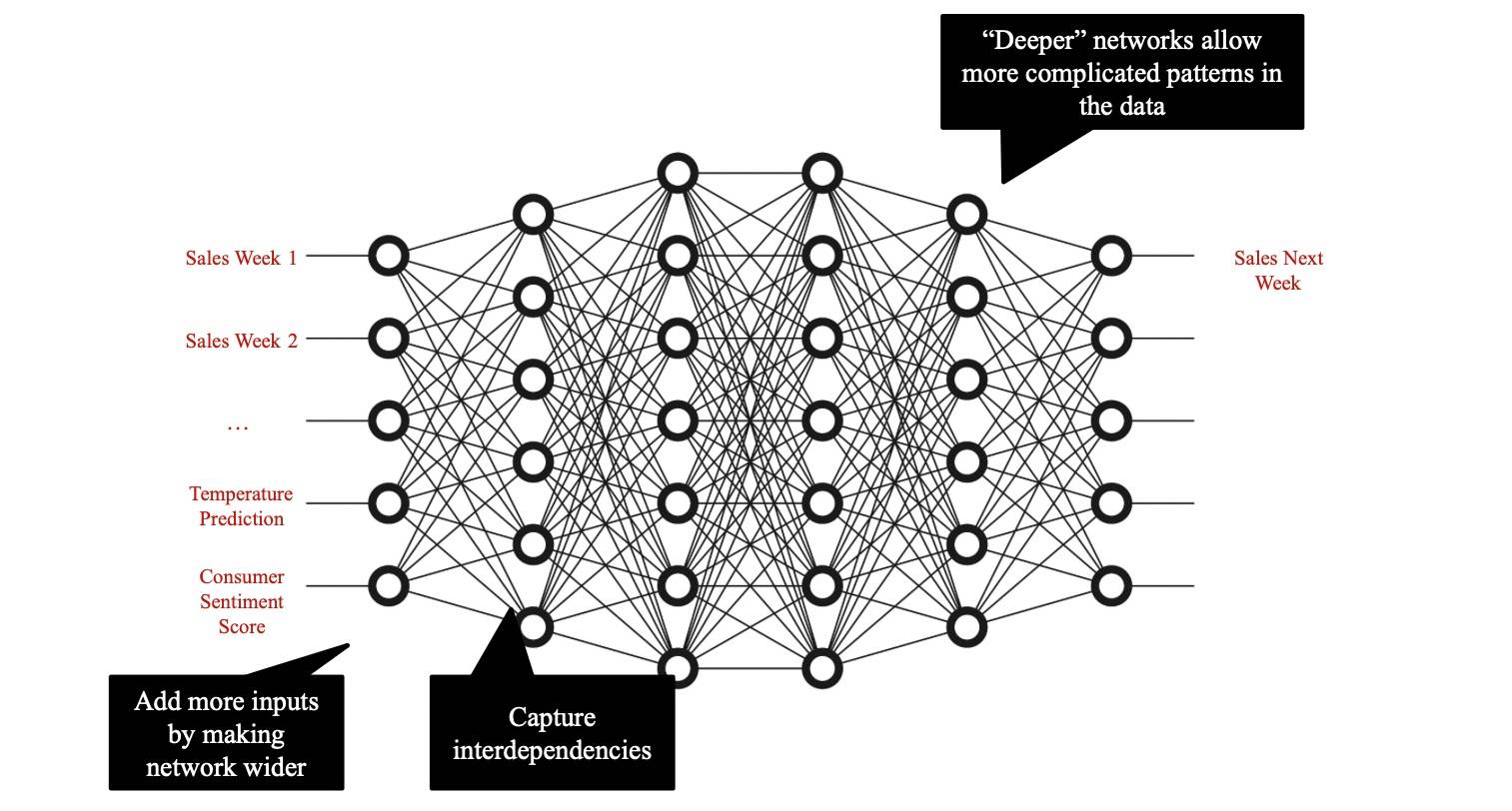

Visualizing the AI Advantage

To illustrate AI’s advantage, consider a stylized graph often used to depict a neural network, the backbone of most modern AI systems. A neural network consists of interconnected layers of nodes (neurons), each layer learning to identify increasingly complex features of the data. This architecture allows the network to model intricate relationships between variables, leading to more accurate predictions.

“Traditional” Deep Learning: Predictions

While the initial costs of developing and implementing AI for demand forecasting can be high, the significant reduction in prediction errors justifies the investment. As supermarkets face the challenges of stocking the right products in the right quantities, AI offers a powerful tool to enhance accuracy, reduce waste, and ultimately improve customer satisfaction. The evolution from traditional models to AI-driven forecasting marks a significant leap forward in retail operations, demonstrating the transformative potential of AI in solving complex, real-world problems.

AI’s “black box” nature often evokes a sense of mystery and complexity, but breaking it down reveals a structure rooted in mathematical principles and data processing. Central to most modern AI, particularly deep learning, are neural networks, which have become powerful tools for various applications. This exploration will delve into the architecture of neural networks, their growth in computational power, and the implications of their expansion.

Neural Network Architecture

A neural network consists of layers of interconnected nodes, each representing inputs (like sales data, temperature predictions, customer sentiment scores, etc.) or outputs (like demand forecasts). Each connection, or line between nodes, signifies a mathematical operation combining inputs to capture interdependencies. The power of neural networks lies in their ability to model complex relationships by stacking multiple layers (deep networks) and including numerous parameters (wide networks).

Key Features of Neural Networks:

- Inputs and Nodes: Basic units representing data points or features.

- Connections and Layers: Mathematical operations combining inputs to form more abstract representations.

- Interdependencies: Critical for understanding trends and making accurate predictions.
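The structure described above can be sketched as a tiny two-layer network in plain Python. This is an illustration only: the input scaling, the layer sizes, and the hand-picked weights are all assumptions, and a real network would learn its weights from data rather than have them written by hand.

```python
def relu(x):
    """Common activation function: pass positive values through, zero out the rest."""
    return max(0.0, x)


def dense(inputs, weights, biases, activation=None):
    """One fully connected layer: each output node is a weighted sum of every input."""
    outputs = []
    for node_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(activation(total) if activation else total)
    return outputs


# Hypothetical, pre-scaled inputs: recent sales, forecast temperature, sentiment score.
features = [1.2, 3.0, 0.5]

# Hand-picked illustrative parameters (not learned).
hidden_weights = [[0.5, 0.2, 0.0],   # hidden node 1 mixes sales and temperature
                  [0.0, 0.4, 1.0]]   # hidden node 2 mixes temperature and sentiment
hidden_biases = [0.0, -1.0]
output_weights = [[1.0, 2.0]]        # the output node combines both hidden nodes
output_biases = [0.1]

hidden = dense(features, hidden_weights, hidden_biases, activation=relu)
demand_score = dense(hidden, output_weights, output_biases)[0]
print(hidden, demand_score)
```

Each row of weights corresponds to the set of connections feeding one node. Adding rows makes a layer wider, and adding more `dense` calls makes the network deeper.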

Historical Context and Evolution

For many years, neural networks struggled to outperform other methods due to limitations in computational power and data availability. However, as networks became more complex, they began capturing intricate patterns, significantly improving their performance. The breakthrough came with deep learning, which scaled neural networks to new heights.

Neil Thompson, Director – FutureTech Project, MIT Computer Science and AI Lab

ImageNet and Deep Learning Breakthrough:

- ImageNet Dataset: A vast collection of labeled images used to benchmark computer vision algorithms.

- 2012 Milestone: Geoff Hinton’s lab at the University of Toronto won the ImageNet competition using deep learning, marking the start of a new era in AI.

The Scaling Phenomenon

The effectiveness of neural networks heavily depends on their size and the amount of data they process. This scaling has been exponential, with each generation of models requiring vastly more computational resources than its predecessors.

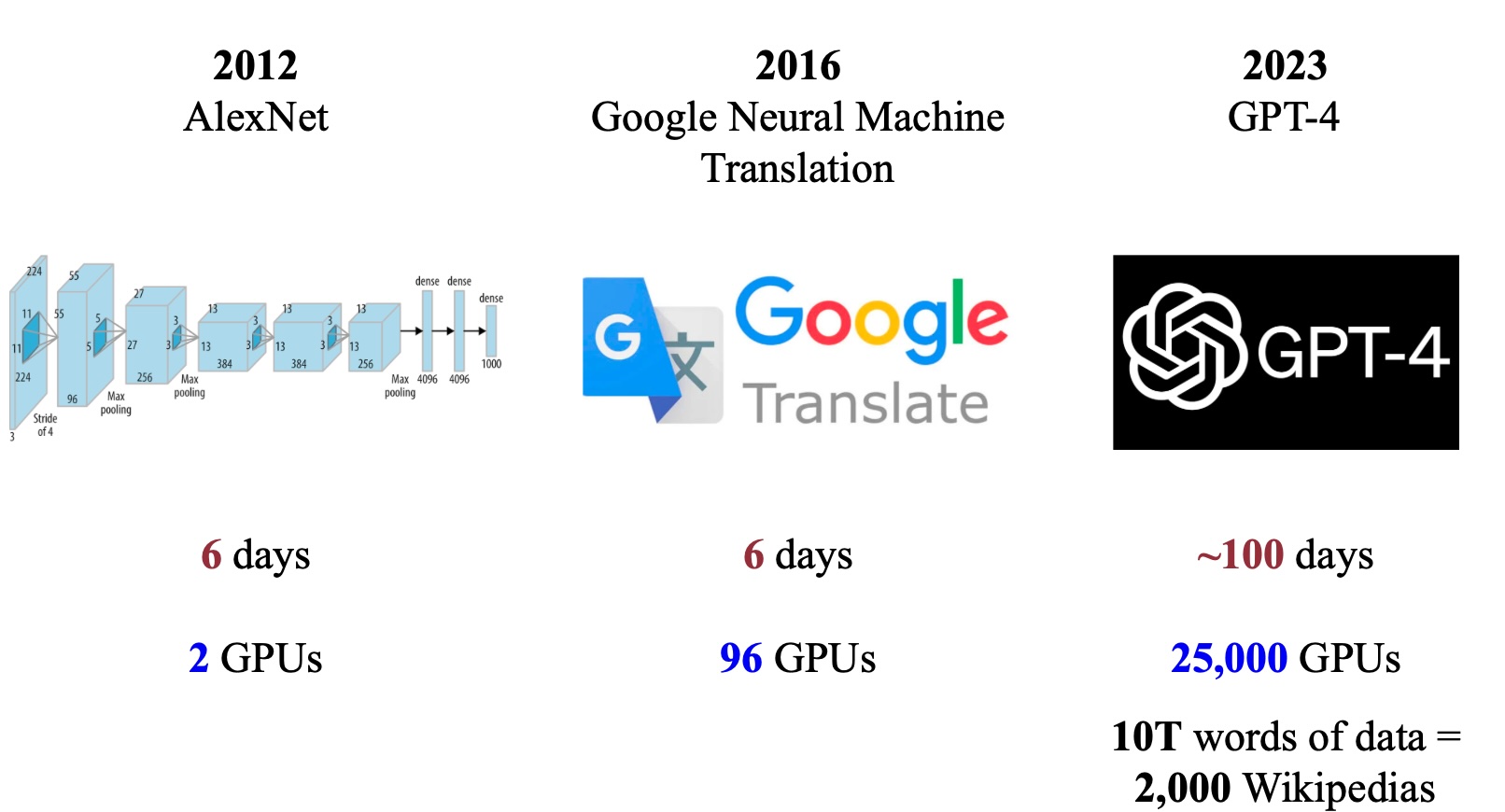

Computational Demands:

- AlexNet (2012): Trained on 2 GPUs for 6 days.

- Google Neural Machine Translation (2016): Trained on nearly 100 GPUs for 6 days.

- GPT-4: Reportedly trained for about 100 days on roughly 25,000 GPUs, a significant leap in computational requirements.
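A quick way to compare these runs is to multiply GPU count by training days. The sketch below does that arithmetic with the figures listed above, rounding "nearly 100 GPUs" to 100 as an assumption. GPU-days is a crude measure: it ignores how much faster each generation of GPU is, so it understates the true growth in computation.

```python
# (number of GPUs, training days), taken from the list above.
training_runs = {
    "AlexNet (2012)": (2, 6),
    "Google translation (2016)": (100, 6),   # "nearly 100" rounded to 100
    "GPT-4": (25_000, 100),
}

gpu_days = {name: gpus * days for name, (gpus, days) in training_runs.items()}
baseline = gpu_days["AlexNet (2012)"]

for name, value in gpu_days.items():
    print(f"{name}: {value:,} GPU-days, about {value / baseline:,.0f}x AlexNet")
```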

Economic and Environmental Implications

The massive computational power required for training state-of-the-art models translates into substantial monetary and environmental costs. To give a sense of scale, GPT-4 is estimated to have been trained on around 10 trillion words, roughly equivalent to 2,000 Wikipedias. Data at this scale is enormous, and so is the monetary investment required.

For instance, training AlphaGo, which was developed some years ago, cost $35 million. Training more recent models like GPT-4 costs up to $100 million and carries a significant carbon footprint. Future AI models, according to predictions from companies like Anthropic, may cost up to $10 billion.

The Blessings of Scale

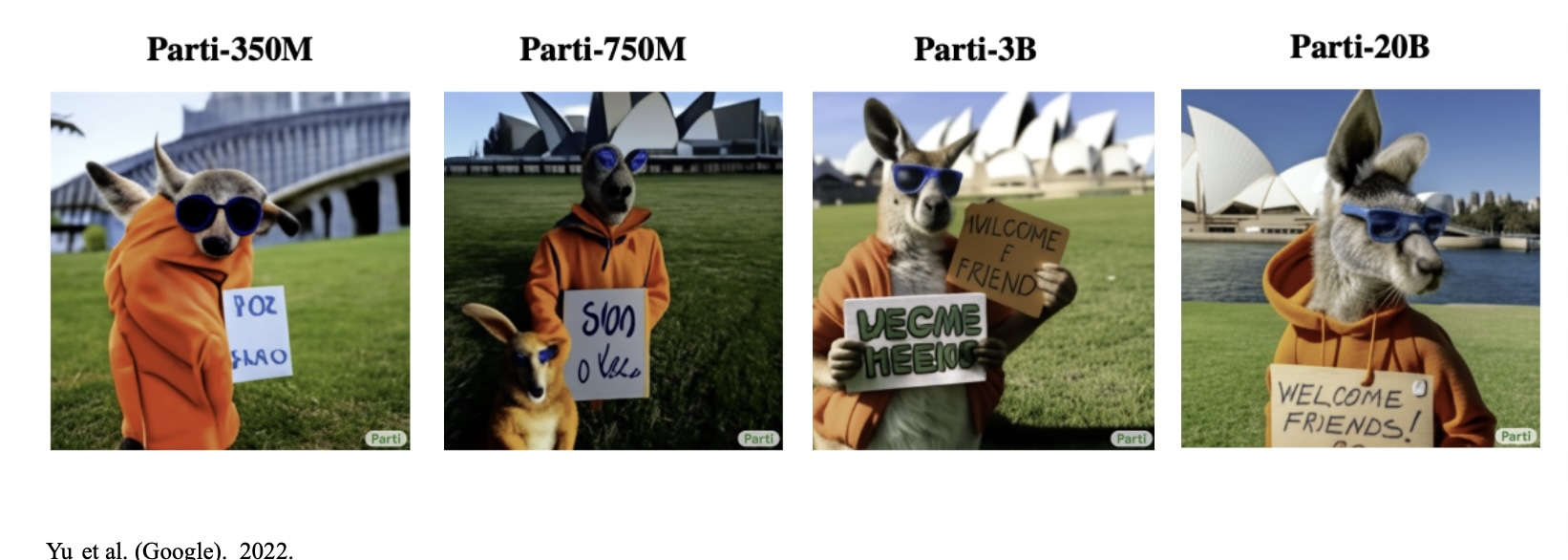

The concept of “the blessings of scale” is crucial to understanding why such investments are made. A prime example is a Google project that generated images from textual descriptions. The input text described a quirky scenario: “a portrait photo of a kangaroo wearing an orange hoodie and blue sunglasses standing on the grass in front of the Sydney Opera House holding a sign on the chest that says, welcome friends.”

When trained with a smaller network of 350 million parameters, the resulting image was far from accurate. However, the generated image became increasingly accurate as the network size increased to 750 million, 3 billion, and finally 20 billion parameters. The larger networks captured more intricate details and dependencies, demonstrating the power of scale in improving model performance.

Recognizing the benefits of scaling up, companies are willing to invest heavily in AI. Sam Altman, CEO of OpenAI, has even sought trillions of dollars to build new chip factories, anticipating the need for a vast number of chips to train future models. This scale of investment is driven by the necessity to continually enhance AI capabilities. However, organizations must consider the significant economic and environmental challenges.

The high costs associated with AI development mean that not every application justifies the investment. For instance, predicting the demand for a can of tuna fish does not require the same precision as forecasting the need for perishable items like fresh meat or fish. This differentiation highlights the importance of assessing the cost-benefit ratio of deploying AI in various contexts.
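The tuna-versus-fresh-fish comparison can be framed as simple arithmetic. The sketch below is a rough illustration under stated assumptions: the only figure taken from the article is the one-third reduction in prediction error. The function `annual_net_benefit`, the error volumes, the per-unit costs, and the annual AI cost are all hypothetical.

```python
def annual_net_benefit(weekly_error_units, cost_per_error_unit,
                       error_reduction, annual_ai_cost):
    """Yearly savings from fewer forecasting errors, minus the cost of the AI system."""
    weekly_saving = weekly_error_units * cost_per_error_unit * error_reduction
    return weekly_saving * 52 - annual_ai_cost


ERROR_REDUCTION = 1 / 3   # the one-third improvement reported for the store trial
ANNUAL_AI_COST = 1_000    # hypothetical per-product share of running costs

# Canned tuna keeps on the shelf, so a forecasting miss costs little (hypothetical 0.10 per unit).
tuna = annual_net_benefit(50, 0.10, ERROR_REDUCTION, ANNUAL_AI_COST)

# Fresh fish spoils, so each over-ordered unit is largely written off (hypothetical 6.00 per unit).
fresh_fish = annual_net_benefit(50, 6.00, ERROR_REDUCTION, ANNUAL_AI_COST)

print(f"tuna: {tuna:,.0f}  fresh fish: {fresh_fish:,.0f}")
```

With these made-up inputs the same accuracy gain loses money on tuna and pays off on fresh fish. The difference comes entirely from what a forecasting error costs for each product.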

Moreover, the environmental impact of large-scale AI training cannot be ignored. The energy consumption and carbon footprint associated with powering vast data centers and running intensive computations are significant concerns that need to be addressed.

Key Considerations:

- Economic Cost: High monetary investment is needed for hardware and energy.

- Environmental Impact: Significant carbon dioxide emissions, raising concerns about sustainability.

However, advancements in AI hardware, such as more efficient GPUs from companies like Nvidia, and algorithmic improvements are likely to drive down the cost of achieving a given level of performance in the future.

Organizations must strategically plan their AI investments, deciding whether to stay at the cutting edge or wait for costs to decrease as technology advances. By understanding these dynamics, businesses and governments can make informed decisions about when and how to integrate AI into their operations.
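The "adopt now or wait" decision can be explored with a small sketch. It assumes, purely for illustration, that the cost of reaching a given level of performance falls by a fixed fraction every year. The function `years_until_affordable` and all of the numbers are hypothetical, and real cost declines are unlikely to be this regular.

```python
def years_until_affordable(cost_today, budget, annual_cost_decline):
    """Count the years until a steadily falling cost fits within the budget."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    if not 0 < annual_cost_decline < 1:
        raise ValueError("annual_cost_decline must be between 0 and 1")
    years = 0
    cost = cost_today
    while cost > budget:
        cost *= 1 - annual_cost_decline   # cost shrinks by the same fraction each year
        years += 1
    return years


# Hypothetical: a capability costing 1,000,000 today, a budget of 250,000,
# and costs halving every year.
wait = years_until_affordable(1_000_000, 250_000, 0.5)
print(wait)
```

An organization that gains little from early adoption might wait out those years, while one with high-value use cases may find that staying at the cutting edge pays for itself sooner.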

__________________________________________________

This article is adapted from a keynote address by Neil Thompson, Director – FutureTech Project, Massachusetts Institute of Technology Computer Science & AI Lab, delivered at NextTech Summit on May 29, 2024. It has been edited for clarity and length by Liji Varghese, Associate Editor, MIT SMR Middle East. Boston Consulting Group (BCG) was the Knowledge Partner and AstraTech was the Gold Sponsor for the summit.